The advanced chatbot ChatGPT-4 has been one of the biggest news stories of the year so far, and understandably so, as its ingenious uses continue to impress. Unfortunately, however, it's not all positive: the chatbot can also be used for malicious purposes. Researchers have warned that cybercriminals can use ChatGPT to write the text of phishing emails, which means more phishing emails and more cyberthreats. Then there's the problem of fake ChatGPT apps, websites, and associated malware, which we've reported on previously.

This week we've discovered yet more ChatGPT-4 phishing attacks and other threats. Read on for the lowdown.

ChatGPT-4 Phishing Websites

AI Pro

![ChatGPT-4 Phishing Websites_AI Pro website- getaipro[.]live_20230429](https://news.trendmicro.com/api/wp-content/uploads/2023/04/ChatGPT-4-Phishing-Websites_AI-Pro-website-getaipro.live_20230429-1024x561.jpg)

Ink AI

![ChatGPT-4 Phishing Websites_Ink AI website- getinkai[.]com:discount _20230429](https://news.trendmicro.com/api/wp-content/uploads/2023/04/ChatGPT-4-Phishing-Websites_Ink-AI-website-getinkai.comdiscount-_20230429-1024x532.jpg)

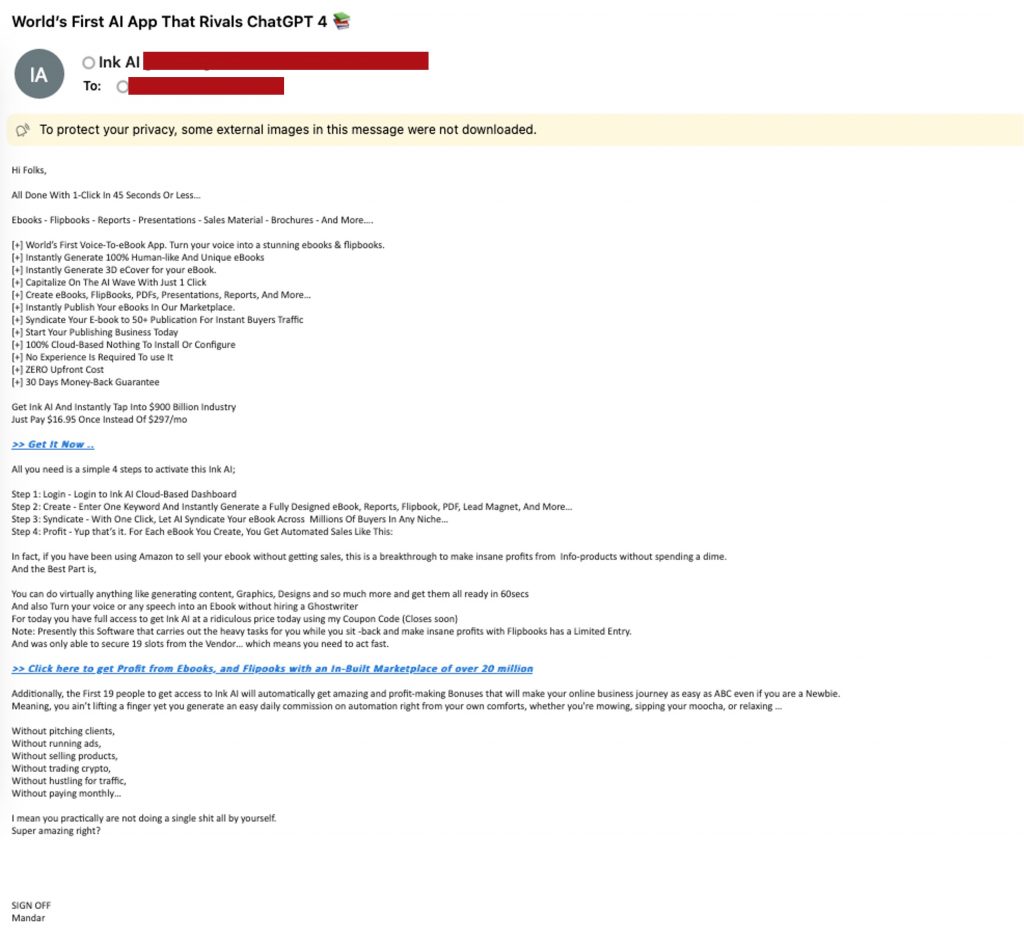

Both AI Pro and Ink AI are highly suspect, and both operate in the same way: a chaotic phishing email arrives in your inbox promising a new AI chatbot that has seemingly appeared out of nowhere yet is supposedly superior to ChatGPT. Both claim to be able to do everything ChatGPT can do, and more! You may wonder, then, why you've never heard of either in the news. That would be a good question.

If you click the phishing links in these emails, you'll be taken to the websites shown above, which appear to have been designed by a lunatic with rainbows for eyes. Aside from their random content and garish look, there are other red flags suggesting that these two websites are scams:

- The websites were created only last month.

- No support or contact details are available.

- There are numerous grammatical errors and strange word choices, especially in the emails.

- There are exaggerated claims and strangely precise, yet completely hypothetical, figures, such as the $569.56 claim and the 45 seconds mentioned by both.

- AI Pro and Ink AI appear to share duplicated content.

Our advice? Stay away from emails and websites like these, and stick to OpenAI’s official ChatGPT or other reputable chatbots, such as Google’s Bard.

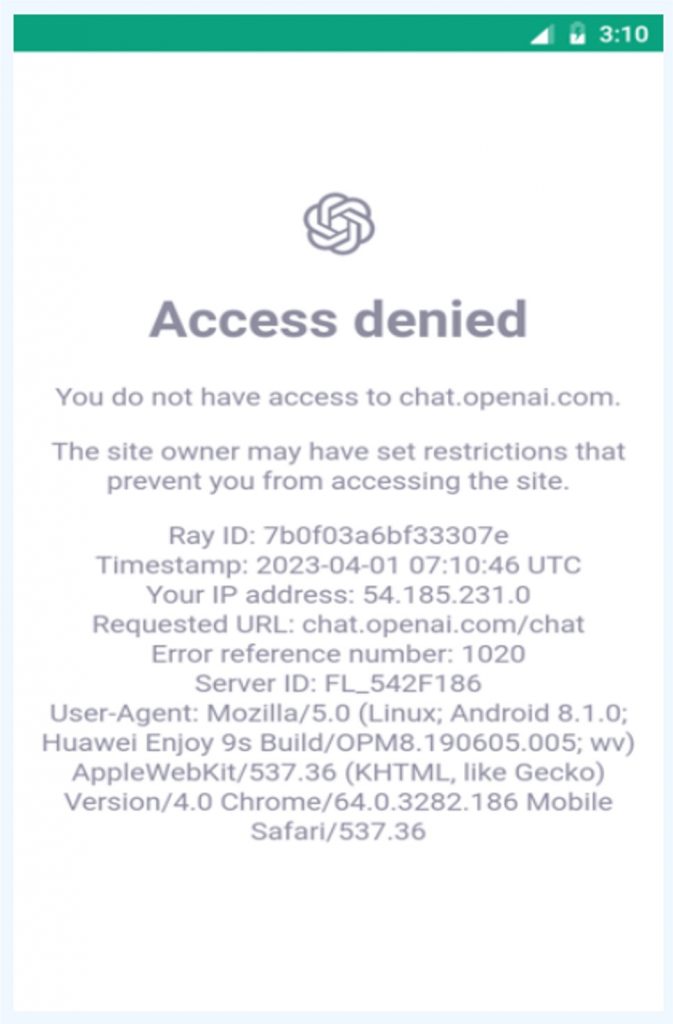

ChatGPT-4 “Banker” Phishing Attack

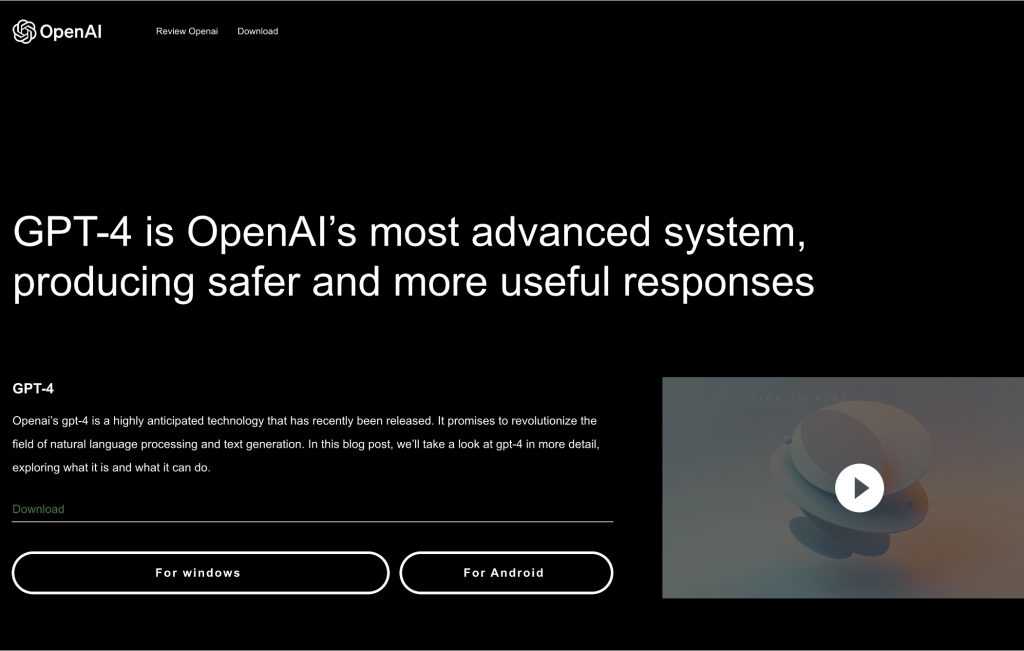

The ChatGPT "Banker" phishing attack involves fake webpages and a trojan. Would-be victims are deceived by malicious websites impersonating ChatGPT, such as the one shown above.

The attackers use a phishing lure, in this case a request for service permissions, to entrap the victim. If the victim complies, an Android banking trojan is downloaded onto their device, and the cybercriminal can then steal their financial credentials.

Be on the lookout for these dangerous ChatGPT phishing websites:

- openai-pro[.]com

- pro-openai[.]com

- openai-news[.]com

- openai-new[.]com

- openai-application[.]com

Other threats we’ve seen include:

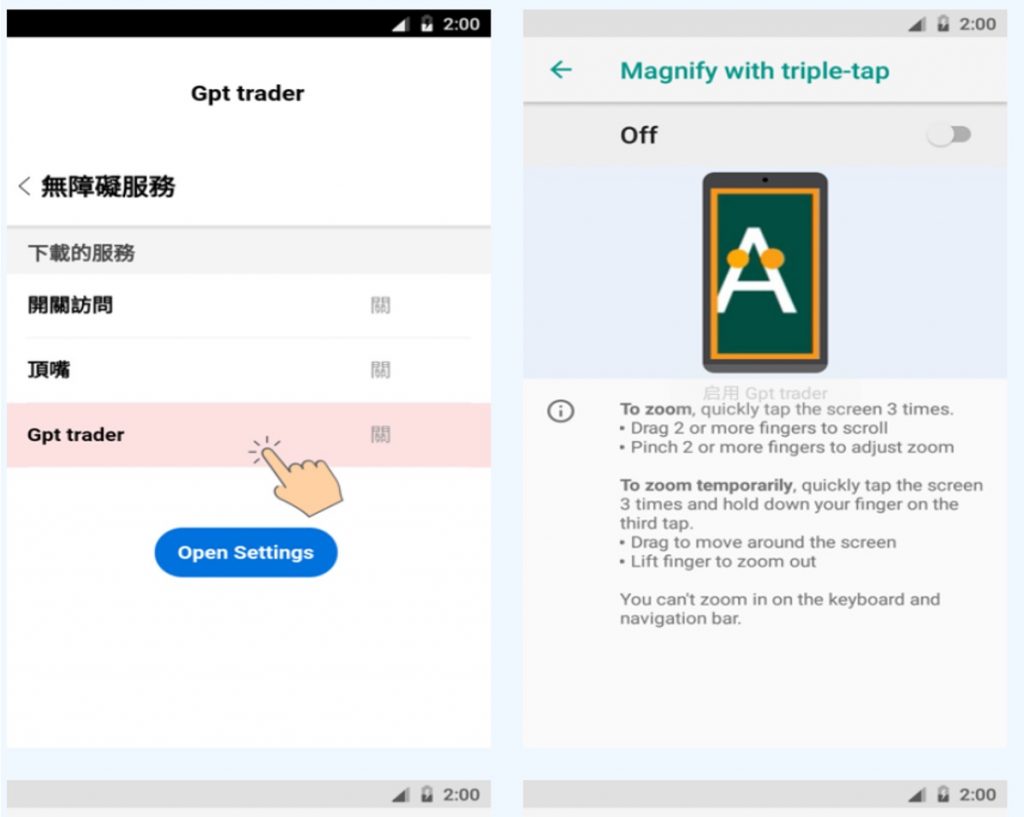

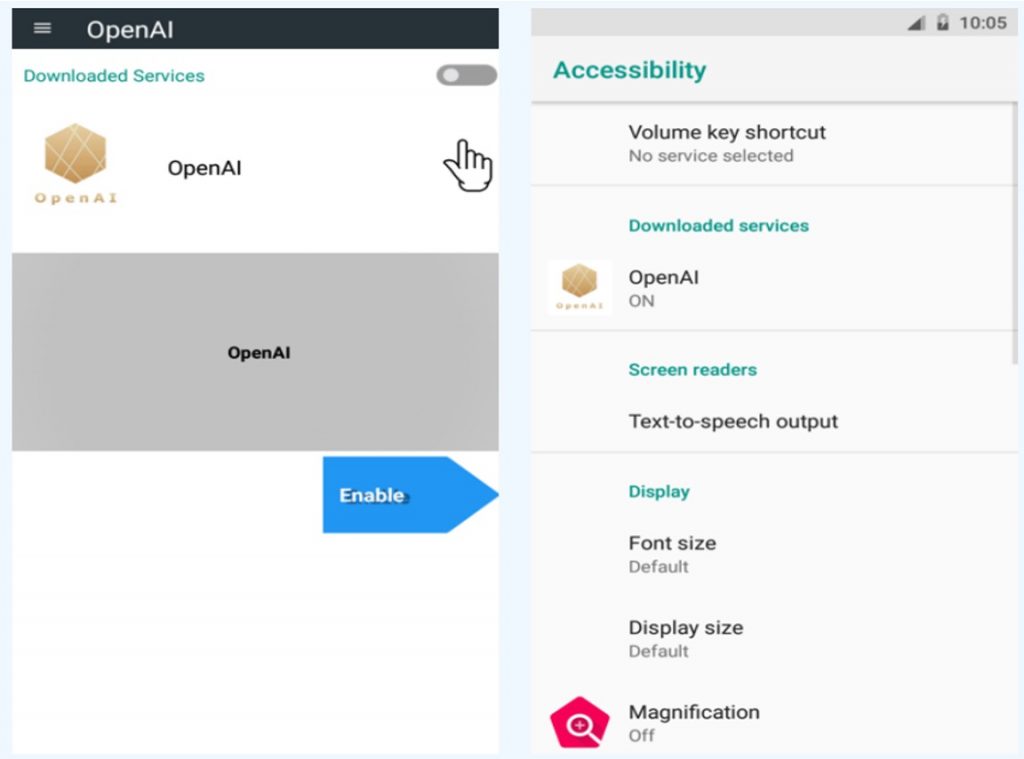

Backdoor ChatGPT Threat

Opens a limited OpenAI webpage and demands remote-control access to the victim's device.

Spyware ChatGPT Threat

Uses malicious fake apps that request excessive device permissions and then install spyware to steal personal credentials.

Billfraud ChatGPT Threat

Discreetly subscribes its target to various premium services through SMS billing fraud.

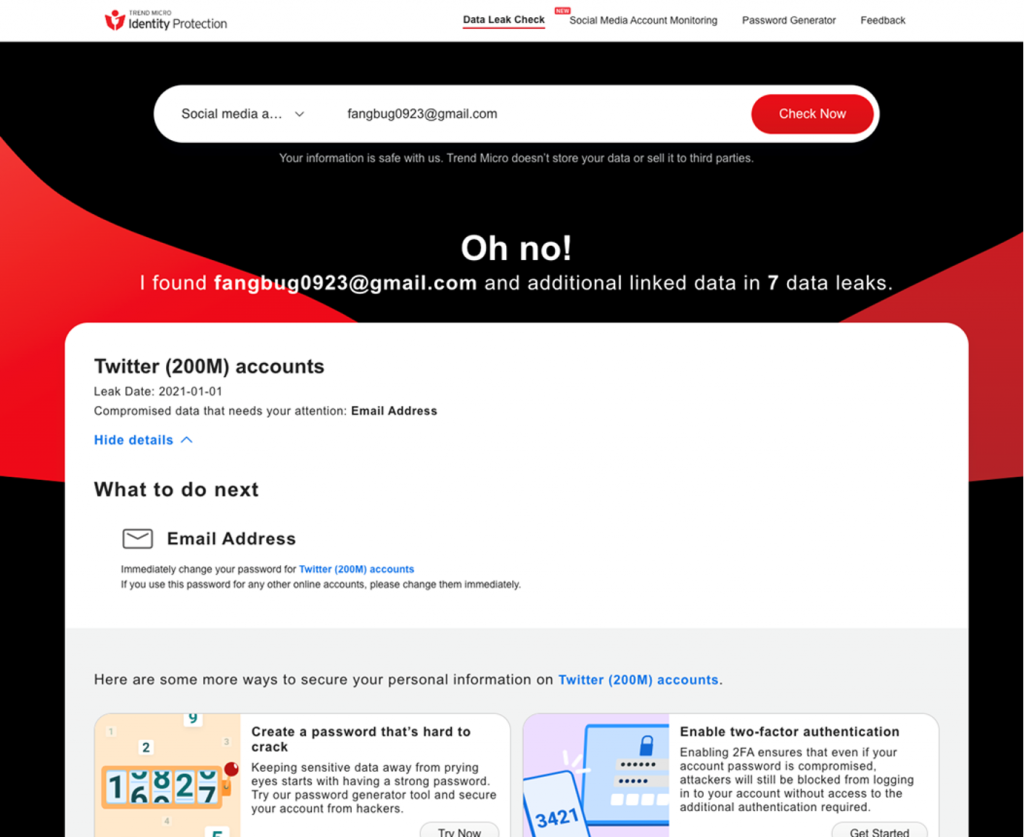

Protecting Your Social Media and Personal Info

We encourage readers to head over to our new FREE ID Protection platform, which has been designed to meet challenges such as those above. With its Social Media Account Monitoring tool, you can secure your social media accounts and receive a personal report.

Aside from this, you can also:

- Check whether your data (email address, phone number, passwords, social media accounts) has been exposed in a leak.

- Get strong, hard-to-crack password suggestions from our advanced AI.

All this for free, so give it a go today. As always, we hope this article has been an interesting and/or useful read. If so, please do SHARE it with family and friends to help keep the online community secure and informed, and consider leaving a like or comment below. Here's to a secure 2023!
