Deepfake videos, face-swapping, voice cloning… doesn’t it feel like in recent years there’s nothing we can trust anymore? Artificial Intelligence (AI) has fueled the development of deepfake technology, blurring the boundary between what is real and what is fake. Keep reading to learn about the top deepfake scams you should watch out for in 2024.

Cybercriminals use deepfakes to impersonate celebrities, public figures, or corporate executives for various malicious purposes, causing severe consequences such as financial losses and reputational damage. Sextortion schemes, for example, have become more rampant because of AI. Deepfakes make it easier for criminals to create fake explicit content by manipulating victims’ social media photos and videos; they then use the content for sextortion and harassment, as the FBI has warned.

Deepfake Scams Are on the Rise

We conducted in-depth research on the malicious uses of AI with EUROPOL and the United Nations Interregional Crime and Justice Research Institute (UNICRI) back in 2020, and the trend is still rising.

According to the World Economic Forum, the amount of deepfake content increased by 900% between 2019 and 2020 alone. As AI continues to advance, experts suggest that by 2026 a vast majority of online content could be AI-generated, making it more challenging for people to verify the legitimacy of content in the future.

It is estimated that in 2023, 26% of small companies and 38% of large companies experienced deepfake-related fraud, leading to substantial monetary losses. Studies also show that at least 1 in 10 companies have been targeted by deepfakes.

Top Deepfake Scams 2024

Deepfake scams evolve as technology evolves, making it harder and harder for people to tell the real from the fake. Below is a list of top deepfake scams to watch out for:

#1 – Romance Scam

We’ve all heard about online romance scams in which scammers impersonate someone else, like a military service member based overseas, and ask for money online. While most of us think we know all the tricks and won’t fall victim, scammers are employing new tactics using advanced deepfake technology to exploit people.

Historically, one of the red flags of a romance scam is that the scammers won’t join a video call or meet you in person. However, with deepfake face-swapping apps, scammers can now get away with doing video calls — they can win your trust easily with a fake visual that makes you believe the person on the video call is real.

That’s how the “Yahoo Boys” upgraded their tactics. Notorious since the late 1990s, the Yahoo Boys used to send scam emails through Yahoo Mail to carry out schemes like phishing and advance-fee fraud. Today, the Yahoo Boys use fake video calls powered by deepfake technology, earning the trust of victims across dating sites and social media.

These types of deepfake romance scams can get pretty creative. In 2022, Chikae Ide, a Japanese manga artist, revealed that she lost 75 million yen (almost half a million USD) to a fake “Mark Ruffalo” online. Although she was suspicious at first, it was the convincing deepfake video call that removed her doubts about transferring money.

#2 – Recruiting Scam

With deepfake technology, scammers can pose as ANYONE. For example, they impersonate recruiters on popular job sites such as LinkedIn.

Scammers offer what appears to be a legitimate online job interview. They use deepfake audio and face-swapping technology to convince you that the interviewer is from a legitimate employer. Once you receive confirmation of a job offer, you are asked to pay for a starter pack and to share personal information, such as bank details for salary set-up.

Scammers also pose as job candidates. The FBI has warned that scammers may use deepfake technology and people’s stolen personally identifiable information (PII) to create fake candidate profiles. They apply for remote jobs with the goal of accessing a company’s sensitive customer and employee data, which they can then exploit further.

#3 – Investment Scam

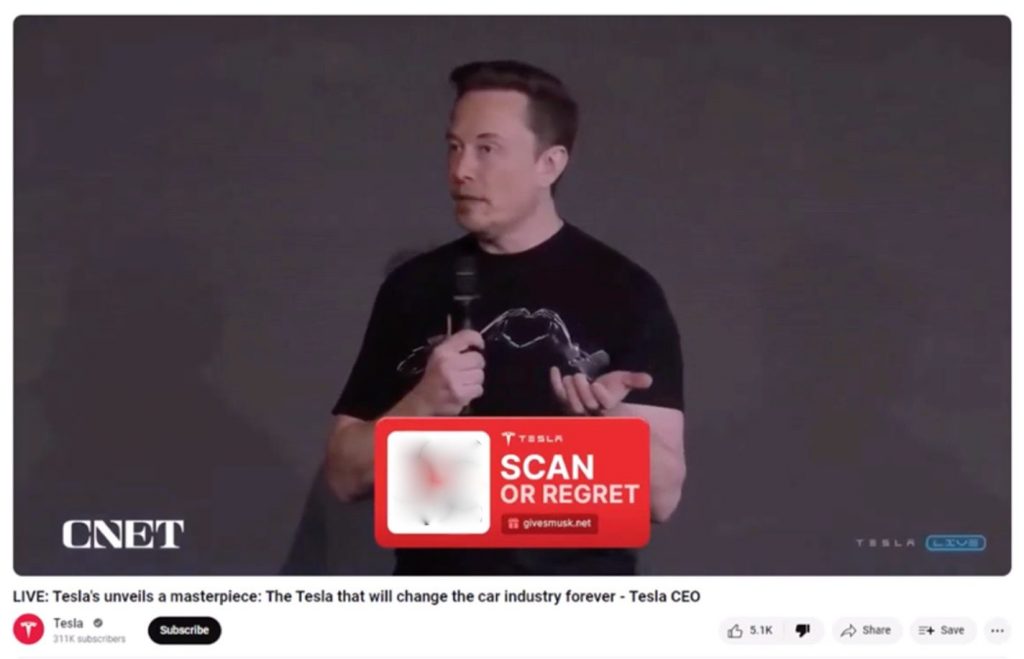

Deepfakes are also commonly used for fake celebrity endorsements in investment scams. In 2022, deepfake videos featuring Elon Musk giving away crypto tokens circulated online. These deepfakes advertise too-good-to-be-true investment opportunities and lead to malicious websites. Below is a recent example of a fake YouTube live stream in which an Elon Musk deepfake promotes cryptocurrency airdrop opportunities.

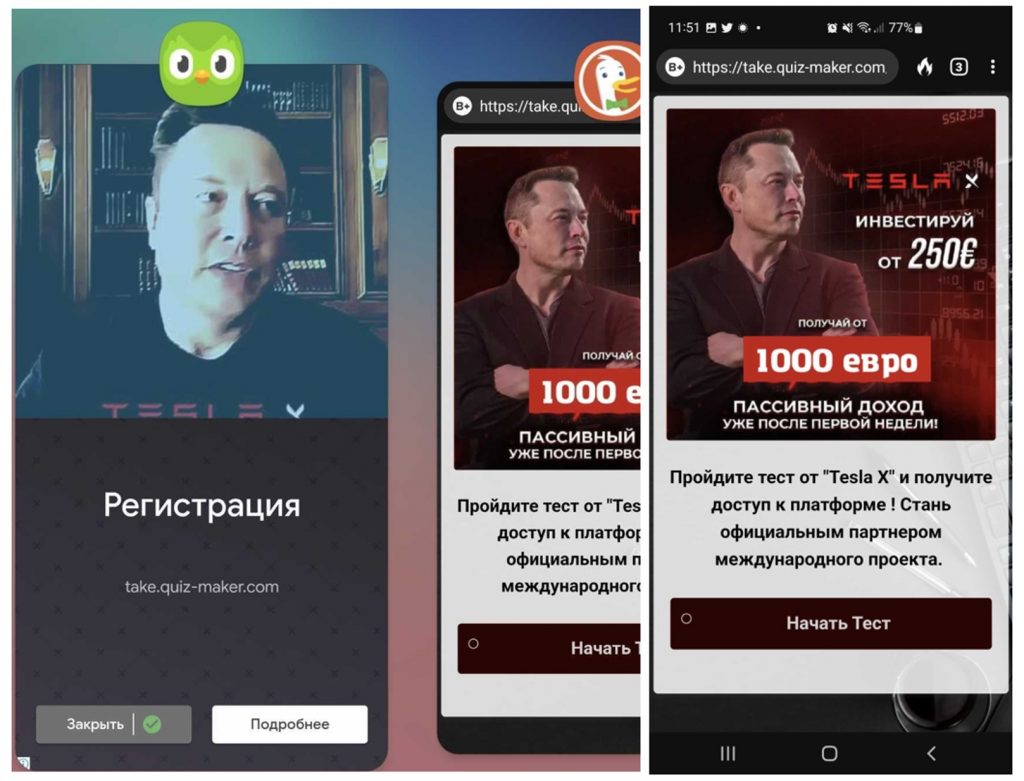

Deepfakes can appear even in legitimate, popular mobile applications. Below is Elon Musk again promoting “financial investment opportunities” in an advertisement seen on Duolingo. If you fall for the scam and click on the ad, it leads you to a page that, when translated, offers “Investment opportunities; invest €250, earn from €1000.” In other cases, clicking could even trigger a malware download. Be cautious!

How to Stay Safe from Deepfake Scams

Below are some best practices for identifying deepfake scams:

- Be skeptical of whoever is on the video call with you, e.g., ask questions that only the real person would know the answers to.

- If the caller is someone you’ve never met before, stay extra suspicious!

- For an online crush: are there any anomalies such as weird lip syncing, stiff facial or body movements, irregular shadows, or interrupted audio?

- For job interviews: is this job offering open to the public? Is the company trustworthy? Are you being contacted via their official channels?

- For investment opportunities: is the person in the video repeating themselves? Do the audio and lip movements fail to match? Be extra suspicious of out-of-the-blue requests for money or personal information.

- Trust your instincts. If something feels off, end the video call and contact the real person directly, ideally using another method of communication (a phone call, for example).

- Use technology to help spot deepfakes! Consider using Trend Micro Deepfake Inspector, our newly launched FREE tool, to help you verify the identities of people on video calls in real time, ensuring they are not using deepfake technology to alter their appearance.

Designed for live video calls on Windows PCs, it scans for AI face-swapping content in real time, alerting you if you’re talking with a potential deepfake scammer and protecting you from harm.

If you’ve found this article an interesting or helpful read, please SHARE it with friends and family to help keep the online community secure and protected.
