Ever since ChatGPT was released to the public several months ago, the pros and cons of AI have been the talk of the online town. Here at the Trend Micro news blog, we’ve been covering the ins and outs of developments as they happen: from the recent ChatGPT data breach to My AI on Snapchat, not to mention the impact of chatbots and AI on education. In this article, we provide a run-down of recent AI-based threats that we’ve been tracking.

AI Voice Cloning

AI voice cloning is being used by scammers to impersonate people and deceive victims into giving away money or sensitive information. Using a short clip of someone’s voice taken from a video on social media, scammers can easily create a voice clone of that person, then call their family, friends, or colleagues and impersonate them.

Scammers have recently used AI voice generator technology to make it appear as if they have kidnapped children, then demanded large ransoms from distraught parents. Earlier this month it was reported that scammers cloned the voice of a 15-year-old girl from Arizona, called her mom using the cloned voice, and threatened to harm the girl before demanding a $1 million ransom. Thankfully, the mother was able to confirm that her daughter was safe, but the example goes to show the lengths scammers will go to with AI technology.

Another common AI voice scam is the “grandparent scam”, in which scammers use a grandchild’s cloned voice to extract money and personal credentials from a worried grandparent. The Federal Trade Commission (FTC) has warned about other voice cloning scams here. If you experience one of these scams, report it to the FTC at ReportFraud.ftc.gov.

Red Flags to Watch Out For:

- The use of urgent language and demands for money.

- The caller asks you to send cryptocurrency, buy gift cards, or pay them using some other untraceable method.

- Suspiciously good voice recording quality and/or no discernible background noise.

- Inconsistencies in the story or information provided.

- The supposedly kidnapped person is unable to tell you your pre-arranged safe word (if you have previously set one up together).

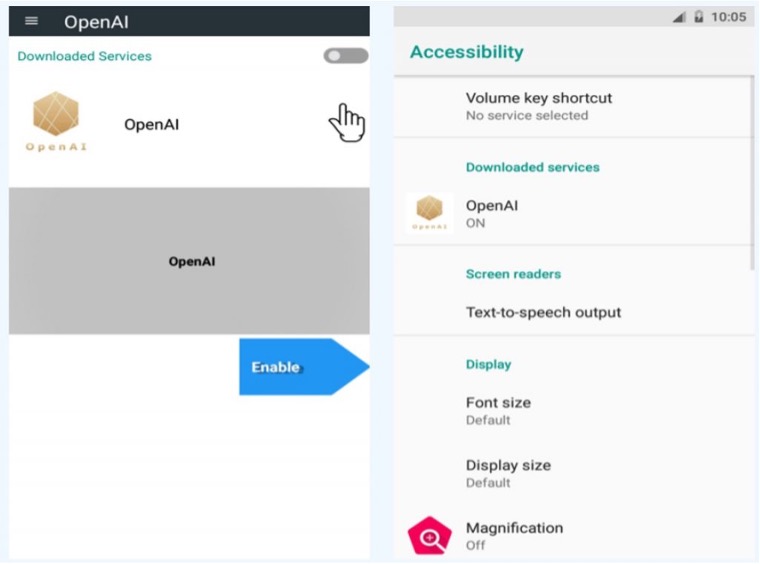

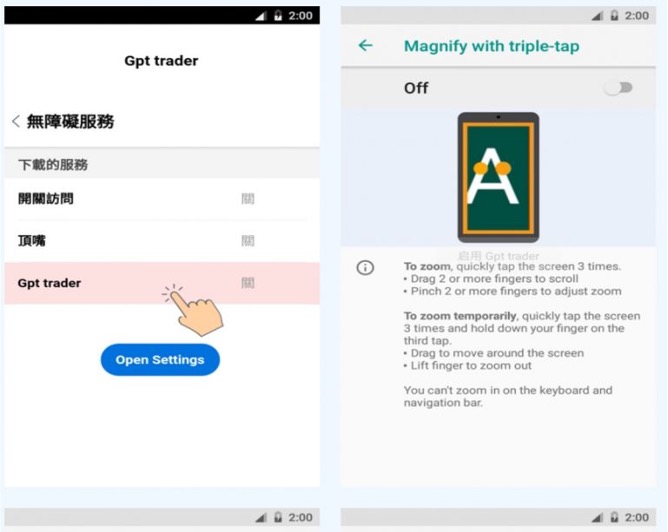

Phishing Ads for Fake AI Software Tools

We have previously reported on fake ChatGPT apps and phishing websites. Scammers run ads for ChatGPT and other AI tools and software on social media sites and search engines. If you click on a malicious link, you could end up on a website that downloads malware onto your device. Some ads may even take you to real software or websites but install the malware through a “backdoor”, in which case you won’t even realize it has happened. Once your device is infected, you could become the victim of ransomware, identity theft, or monetary fraud.

Four Top Tips:

- Never click on unknown software ads.

- Always try to go directly to the official website by entering its address in your browser, rather than clicking through an ad.

- If you don’t know the website address, search for it.

- Use reliable antivirus software and keep it up to date – give Trend Micro Maximum Security a try!
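
For readers comfortable with a little scripting, the idea behind these tips can be sketched as a hostname check: before opening a link from an ad, compare the site it really points to against a short list of addresses you already trust. This is only an illustrative sketch, not a replacement for security software. The `TRUSTED_HOSTS` list and the `is_trusted_link` function are hypothetical names made up for this example, and the domains shown are placeholders you would swap for the sites you actually use.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: replace with the official addresses you have
# verified yourself. These entries are examples only.
TRUSTED_HOSTS = {"openai.com", "trendmicro.com"}


def is_trusted_link(url, trusted_hosts=TRUSTED_HOSTS):
    """Return True only if the link's hostname is a trusted site or one of its subdomains."""
    # urlparse needs a scheme (https://...) to find the hostname;
    # links without one are treated as untrusted.
    host = (urlparse(url).hostname or "").lower()
    return any(host == t or host.endswith("." + t) for t in trusted_hosts)


if __name__ == "__main__":
    for link in [
        "https://chat.openai.com/auth",
        "https://openai.com.free-download.example/setup",
        "https://chat-openai.com/login",
    ]:
        print(link, "->", "trusted" if is_trusted_link(link) else "NOT trusted")
```

The check compares the whole hostname rather than looking for a familiar word somewhere in the link, because lookalike addresses often place a trusted name at the start of a longer, unrelated domain.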

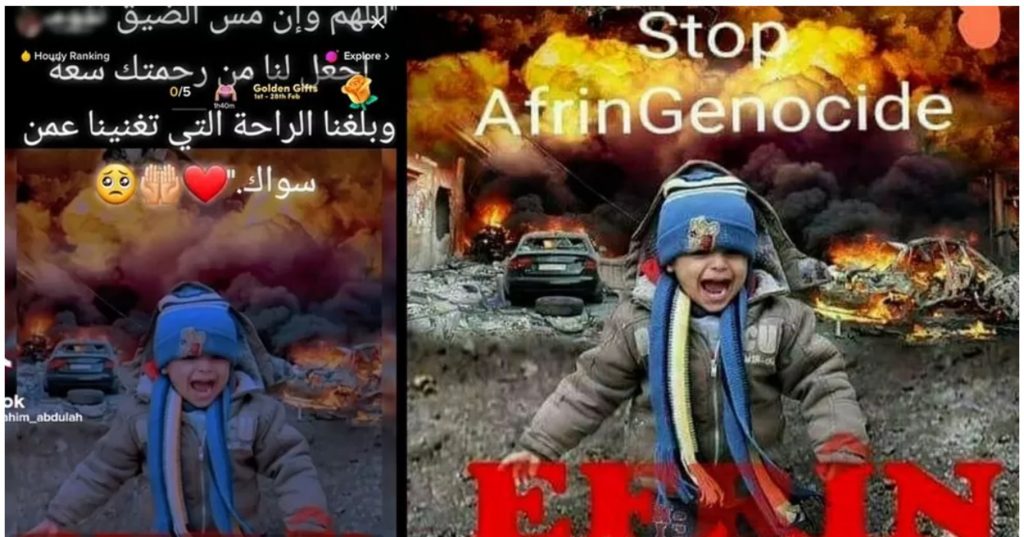

AI-Generated Images Used for Scams

Back in February, we reported on the despicable scammers who were using AI-generated images to make a profit from the Turkey-Syria earthquake. They would post fake images of children as a way to appeal to people’s sympathy and generosity — then they’d pocket the money, and maybe the victims’ credentials too.

The first image was generated by AI. One clue is that the AI has messed up and given the firefighter SIX FINGERS! It has also inexplicably paired a Greek firefighter with a Turkish child. The second is an AI-edited picture of a child victim of the Afrin campaign during a phase of the Syrian Civil War. In response to such images, TikTok stated:

“We are deeply saddened by the devastating earthquakes in Turkey and Syria and are contributing to aid earthquake relief efforts … We’re also actively working to prevent people from scamming and misleading community members who want to help.”

Other examples of AI Images include:

- Jos Avery, a popular Instagram “photographer”, had been fooling his fans with images made by AI.

- AI images have been used in romance scams and in disinformation campaigns, for example fake pictures of Donald Trump being arrested or of the Pope wearing outlandish outfits.

- Celebrated German artist Boris Eldagsen won a category at the Sony World Photography Awards with an AI-generated image.

How to Protect Yourself Against AI Scams

- Practice skepticism when you come across images and content online. Do your research!

- Be cautious when receiving unexpected phone calls or messages.

- If a call is suspicious, end the call and contact your friend/family member/colleague directly or call someone else who can confirm the situation.

- Be skeptical when asked for money via cryptocurrency, gift cards, etc.

- Don’t overshare on social media, because the details you post can help scammers make their lies more believable.

- If you suspect that you are being scammed, report it to the FTC immediately.

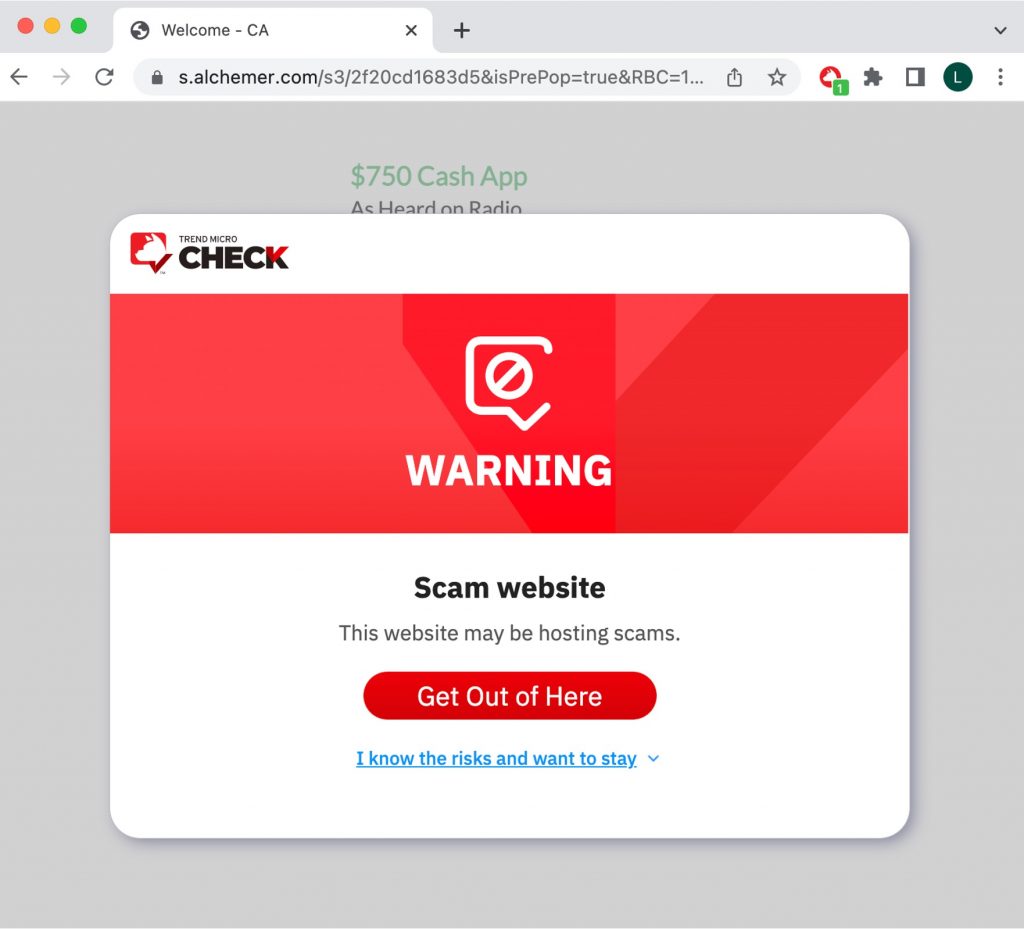

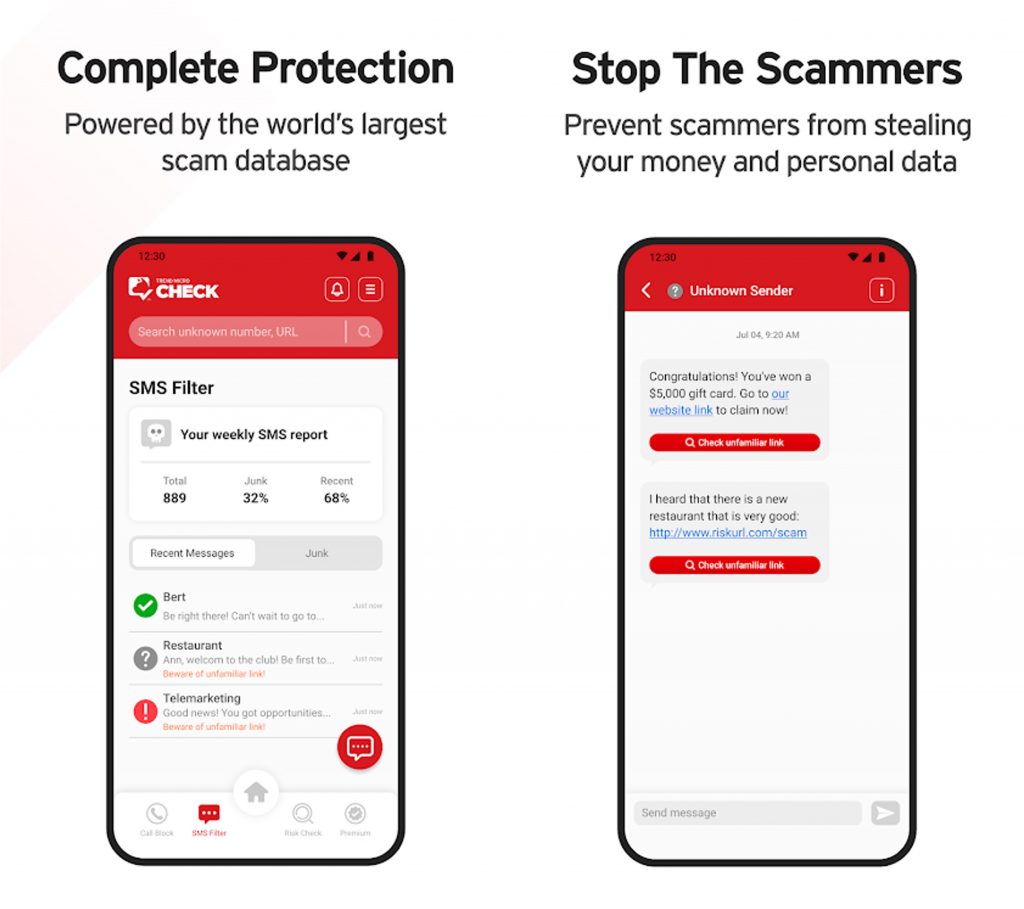

- NEVER use links or buttons from unknown sources! Use Trend Micro Check to detect scams with ease: Trend Micro Check is an all-in-one browser extension and mobile app for detecting scams, phishing attacks, malware, and dangerous links — and it’s FREE!

It blocks unwanted phone calls and annoying spam text messages, and protects you against malicious links in messages on apps such as WhatsApp, Telegram, and Tinder.

Check out this page for more information on Trend Micro Check. And as ever, if you’ve found this article an interesting and/or helpful read, please do SHARE it with friends and family to help keep the online community secure and protected. And don’t forget to leave a like and a comment!


- By Sharon Moist | May 25, 2023

![[Alert] AI-Based Threats and Scams to Watch Out For](/_next/image/?url=https%3A%2F%2Fnews.trendmicro.com%2Fapi%2Fwp-content%2Fuploads%2F2023%2F05%2FiStock-1476755720.jpg&w=3840&q=75)