The rapid advancement of artificial intelligence (AI) has revolutionized many sectors, from healthcare to finance. However, this technological progress has a dark side: cybercriminals are leveraging AI to create more sophisticated and convincing scams. AI makes scams easier to create and distribute, so scammers can do more with less, and they now have many more platforms to target. As we navigate this new landscape, it’s crucial to understand the evolving threats and how to protect ourselves.

AI Analysis of Behavior

One of the most insidious uses of AI in scams is the analysis of potential victims’ online activities and interests. This enables cybercriminals to craft highly personalized and convincing phishing attempts and other targeted threats. AI tools can rapidly analyze large amounts of publicly available data from social media profiles, forum posts, purchasing history, and other online sources to build detailed profiles of individuals. Machine learning algorithms can identify patterns in a person’s online behavior, such as topics they frequently discuss, sites they visit, or times they are most active online.

Natural language processing allows AI to analyze the writing style and language used by potential victims, enabling scammers to craft messages that mimic their communication patterns. Based on the analyzed data, AI can predict what types of scams or phishing lures an individual may be most susceptible to. This level of personalization makes scams much more convincing and harder to detect. For example, a phishing email could reference the target’s recent purchases, use similar language to their friends, and relate to their specific interests and hobbies.

AI Voice Cloning

AI voice cloning has emerged as a troubling tool in the scammer’s arsenal. Using just a short audio clip of someone’s voice, often sourced from social media, scammers can create convincing voice clones to impersonate friends, family members, or authority figures. One distressing application of this technology is in fake kidnapping scams. Criminals use AI-generated voices to make it appear as if they’ve abducted a loved one, demanding ransom from distraught family members. In a recent case, scammers cloned the voice of a 15-year-old girl from Arizona, called her mother while posing as the girl, and threatened harm unless a $1 million ransom was paid.

Another common tactic is the “grandparent scam,” where scammers use a cloned voice of a grandchild to extract money or personal information from worried grandparents. The Federal Trade Commission (FTC) has issued warnings about these and other voice cloning scams, urging victims to report incidents at ReportFraud.ftc.gov.

Red flags to watch out for include:

- Urgent demands for money

- Requests for payment via cryptocurrency or gift cards

- Suspiciously good voice quality or lack of background noise

- Inconsistencies in the story

- Inability to provide pre-arranged safe words
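
Some of the red flags above can be checked mechanically in a text message or call transcript. The following is a minimal Python sketch, assuming simple keyword matching is good enough for a first pass. The pattern table `RED_FLAG_PATTERNS` and the function `find_red_flags` are hypothetical names chosen for illustration, and the keywords are assumptions rather than a vetted detection rule set.

```python
import re

# Hypothetical keyword patterns, loosely based on the red flags listed above.
# They are illustrative assumptions, not a complete or vetted rule set.
RED_FLAG_PATTERNS = {
    "urgent demand for money": r"\b(urgent|immediately|right now|right away)\b",
    "untraceable payment method": r"\b(gift cards?|crypto(currency)?|bitcoin)\b",
}


def find_red_flags(message: str) -> list[str]:
    """Return the names of the red flags whose pattern appears in the message."""
    return [
        name
        for name, pattern in RED_FLAG_PATTERNS.items()
        if re.search(pattern, message, flags=re.IGNORECASE)
    ]


if __name__ == "__main__":
    sample = "Grandma, I need $2,000 in gift cards right now, please don't tell Mom."
    print(find_red_flags(sample))
```

A keyword match is only a prompt to slow down and verify. It cannot judge voice quality, story inconsistencies, or a missing safe word, which still require human attention.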

Deepfake Scams

Deepfake technology, which uses AI to create or manipulate video and audio content, has opened up new avenues for scammers. Initially developed for entertainment purposes, deepfake technology has been co-opted by malicious actors to create convincing fake videos and images.

Celebrities are frequent targets due to their public profiles and cultural capital, but ordinary individuals are also at risk. Cybercriminals can use deepfakes during video calls to impersonate anyone. Whether the caller appears to be a friend, a family member, a potential partner, or an online job interviewer, a video call gives the scammer an opportunity to trick someone into handing over money or personal information. Deepfakes can also be used to create fake pornographic content, spread misinformation, or manipulate public opinion. For instance, in early 2024, a deepfake video circulated showing a fake Taylor Swift supposedly teaming up with Le Creuset for a giveaway campaign.

The dangers of deepfakes extend beyond embarrassment. They can irreparably damage reputations, spread misinformation, destroy relationships, lead to job losses, and become tools for blackmail. According to reports, nearly 4,000 celebrities have been victims of deepfake pornography, and journalists have been impersonated in fake news stories and interviews.

How to spot deepfakes:

- Look for unnatural facial movements

- Check for inconsistent lighting or odd background details

- Pay attention to lip synchronization

- Verify the source of the content

AI Used in Romance Scams

AI has made romance scams more sophisticated and efficient. Romance scammers use AI, primarily in deepfake creation, for three primary purposes:

- Automated messaging: Many scammers use AI chatbots to interact with victims. These chatbots can engage in conversations, mimic human behavior, and build rapport with victims.

- Profile generation: AI algorithms can generate fake profiles on dating websites and social media platforms. These profiles use stolen or AI-generated pictures and information to appear genuine.

- Language generation: AI can generate convincing messages tailored to specific victims based on their profiles and previous interactions, helping scammers to personalize their approach.

These AI-powered techniques allow scammers to operate multiple romance scams simultaneously, increasing their reach and potential for financial gain.

AI in Investment and Giveaway Scams

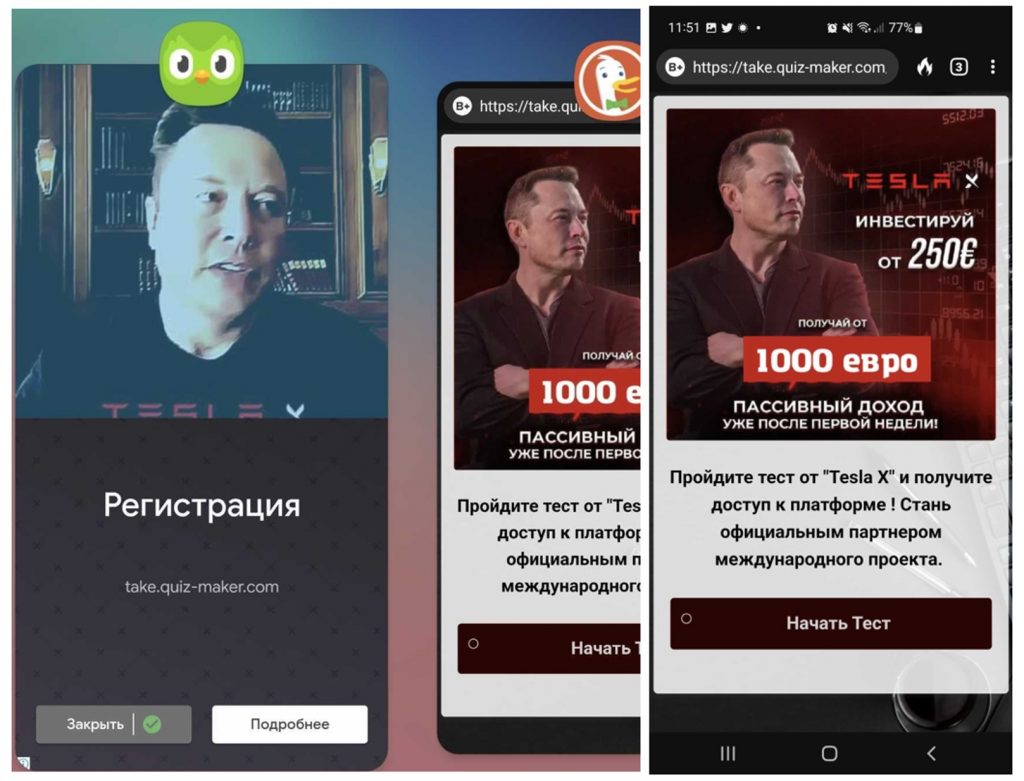

Deepfakes are also commonly used for fake celebrity endorsements in investment scams. For example, deepfake videos regularly circulate featuring Elon Musk giving away crypto tokens online. These deepfakes advertise too-good-to-be-true investment opportunities and lead to malicious websites.

Even legitimate and popular mobile applications aren’t immune to these scams. For instance, an ad featuring a deepfake Elon Musk promoting “financial investment opportunities” was seen on Duolingo. Clicking on such ads can lead to fraudulent investment schemes or even trigger malware downloads.

AI-Generated Images and Fake News

AI’s ability to generate realistic images has been exploited by scammers in various ways. One noteworthy example occurred in the aftermath of the Turkey-Syria earthquake in February 2023, when scammers used AI-generated images of children to appeal to people’s sympathy and generosity in fake charity scams.

AI is also being used to create entire fake news websites. These “newsbot” sites use AI to produce articles in multiple languages, often purely to earn ad revenue for the site owners. Because AI can create content so quickly, these sites can publish numerous AI-generated articles in a short time, each filled with ads. This flood of fake content makes it increasingly difficult for users to distinguish between real and fabricated news.

For online content and images:

- Check the source of the content

- Look for verified accounts or established news outlets

- Be cautious about clicking on links or downloading files from unfamiliar sources

- Use fact-checking websites to verify questionable claims or news stories
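
Checking the source of a link can be partly automated. The sketch below uses Python's standard `urllib.parse` module to test whether a link's hostname belongs to a domain you already trust. The `TRUSTED_DOMAINS` set and the `is_trusted_link` function are hypothetical, and the allowlist entries are placeholders to replace with outlets and services you actually rely on.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; replace with outlets and services you actually trust.
TRUSTED_DOMAINS = {"ftc.gov", "example-news.com"}


def is_trusted_link(url: str) -> bool:
    """Return True if the hostname is a trusted domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    # Match the domain exactly, or require a leading dot so that lookalikes
    # such as "ftc.gov.evil.example" do not pass.
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


if __name__ == "__main__":
    print(is_trusted_link("https://ReportFraud.ftc.gov/"))
    print(is_trusted_link("https://ftc.gov.evil.example/login"))
```

Comparing the full hostname matters because scammers often place a familiar name at the start of an unrelated domain. A passing check shows only where the link points, not that the content is accurate.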

Protecting Yourself in the Age of AI Scams

As AI-powered scams become more sophisticated, it’s crucial to stay vigilant and take steps to protect yourself. Follow these ten best practices:

- Limit the personal information you share publicly online

- Be wary of unsolicited messages, even if they seem personalized

- Enable strong privacy settings on social media accounts

- Use unique, complex passwords for each online account

- Be cautious about granting access to your data to third-party apps and quizzes

- Consider using privacy-focused search engines and browsers

- Practice skepticism when you come across images and content online

- If a call or message seems suspicious, verify through official channels before taking any action

- Be skeptical of requests for money via cryptocurrency, gift cards, or other untraceable methods

- Report suspected scams to the appropriate authorities, such as the FTC
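
For the advice on unique, complex passwords, a password manager is usually the most practical option. As an illustration of what "unique and complex" can mean in practice, here is a minimal sketch using Python's standard `secrets` module. The function name `generate_password` and the default length of 16 are assumptions made for this example, not a recommendation from any standard.

```python
import secrets
import string


def generate_password(length: int = 16) -> str:
    """Build a random password from letters, digits, and punctuation."""
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice draws from a cryptographically secure random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    # Generate a separate password for each account rather than reusing one.
    for account in ("email", "banking", "social media"):
        print(account, generate_password())
```

The `secrets` module is used instead of `random` because it is designed for security-sensitive values such as passwords and tokens.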

Trend Micro Check

With the increasing number and sophistication of scams, staying one step ahead is more crucial than ever. Unfortunately, antivirus software alone isn’t enough. Introducing the newly updated Trend Micro Check! Available for both Android and iOS, Trend Micro Check offers comprehensive protection from deceptive phishing scams, scam and spam text messages, deepfakes, and more:

- Scam Check: Instantly analyze emails, texts, URLs, screenshots, and phone numbers with our AI-powered scam detection technology. Stay secure and scam-free.

- SMS Filter & Call Block: Say goodbye to unwanted spam and scam calls and messages. Minimize daily disruptions and reinforce your defenses against phishing.

- Deepfake Scan: Detect deepfakes in real-time during video calls, alerting you if anyone is using AI face-swapping technology to alter their appearance.

- Web Guard: Surf the web safely, protected from malicious websites and annoying ads.
